Shang-Yi Chuang

ML Researcher | ASR R&D

Apple | Siri

Profile

Shang-Yi Chuang is a Machine Learning Researcher working on Automatic Speech Recognition on the Siri team at Apple. She received her master’s degree in Computer Science from Cornell Tech. She has experience in international collaborations across the United States, Japan, and Taiwan.

Before Cornell Tech, she worked on artificial intelligence at Academia Sinica, Taiwan. Her research interests include natural language processing, speech processing, and computer vision. She collaborated with Prof. Yu Tsao and Prof. Hsin-Min Wang on audio-visual multimodal learning and data compression for speech enhancement. She also worked with Prof. Keh-Yih Su on a cross-lingual question answering system.

She also conducted research on humanoid robots with Prof. Tomomichi Sugihara at Motor Intelligence, Osaka University. Her work focused on improving the control system of a robot arm to create a safer and more comfortable space for humans and robots to work together.

Interests

- Speech Processing

- Natural Language Processing

- Computer Vision

- Multimodal Learning

Education

- M.Eng., Major in Computer Science, Cornell Tech, 2021 - 2022

- B.S., Major in Mechanical Engineering, Minor in Electrical Engineering, National Taiwan University, 2012 - 2017

- FrontierLab@OsakaU Program, Osaka University, 2016 - 2017

Experience

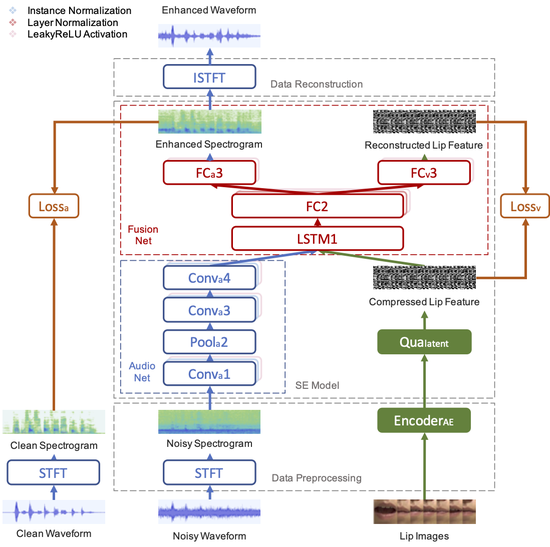

- Audio-Visual Multimodal Learning for On-device Systems

  - Improved system robustness to insufficient hardware and low-quality sensors in a car-driving scenario through a data augmentation scheme

  - Confirmed the effectiveness of lip images (both compressed and uncompressed) in speech enhancement tasks

  - Minimized the additional cost of multimodal processing by applying an autoencoder and data quantization, while addressing privacy concerns about facial data

  - Reduced the data size to 0.33% of the original without sacrificing speech enhancement performance

- Cross-Lingual Movie QA (Question Answering) System

  - Addressed technological inequality caused by limited data in minority languages

  - Applied transfer learning to a Mandarin system by incorporating translated corpora from dominant languages

  - Achieved zero-shot learning on Mandarin movie QA tests using pre-trained multilingual models

- EMA (Electromagnetic Midsagittal Articulography) Systems

  - Designed silent speech systems for patients with vocal cord disorders and for high-noise environments by jointly training with mel-spectrogram and deep feature losses

  - Verified the effectiveness of EMA articulatory movement features in speech-related tasks

  - Improved the character correct rate of automatic speech recognition by 30% in speech enhancement tasks

  - Incorporated EMA into end-to-end speech synthesis systems and achieved an 83% preference rate in subjective listening tests

- Self-Supervised Learning for Speech Enhancement

  - Performed speech enhancement using a denoising autoencoder with a linear regression decoder

  - Improved speech quality by 43% without requiring paired training data

  - Showed potential for unsupervised dereverberation

- Construction of Multimodal Datasets

  - Addressed the asynchronization problems common to multimodal data recorded with separate devices

  - Supervised recording environment setups at collaborating labs, schools, and hospitals

  - Published Taiwan Mandarin Speech with Video (TMSV), an open-source dataset of speech, video, and text

- Ported numerous existing systems from Keras, TensorFlow, and MATLAB to PyTorch, and reduced processing costs by optimizing code and converting data into PyTorch-friendly formats

- Published 5 papers, including 1 in a top-tier IEEE/ACM journal and 4 in conferences

- Took the initiative to serve as server manager, paper-writing mentor, journal reviewer, and internship supervisor

- Unified spectral and prosodic feature enhancement

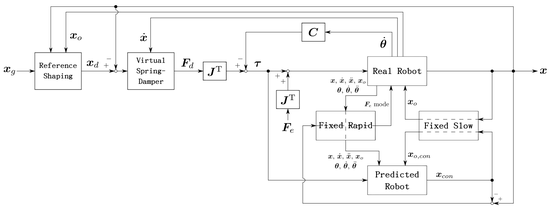

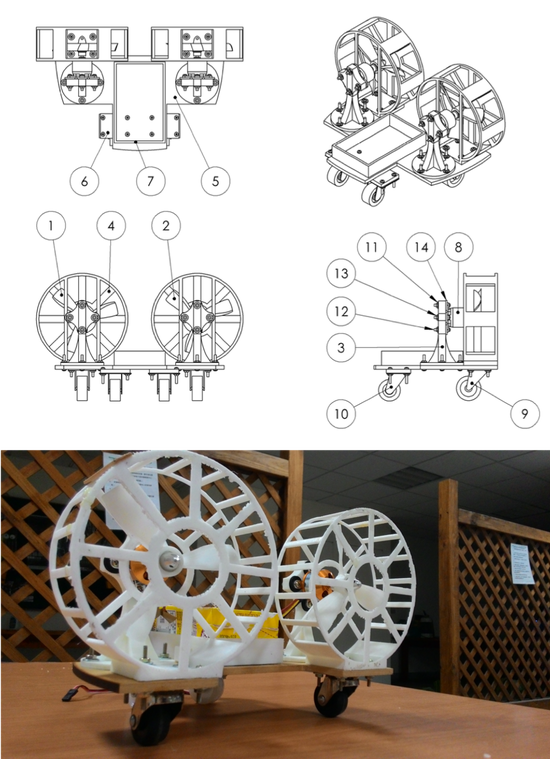

- Motion of Humanlike Robot Arms

  - Aimed to create a safer and more comfortable human-robot working environment

  - Imitated human motion by applying results from biological statistics

  - Smoothed the velocity profiles and trajectories of robot arms even in the presence of external forces

  - Improved a manipulator’s ability to resume motion after external forces are removed

  - Removed the need for force detectors in the proposed control system

- Programming Tools for Control Theory

  - Built a library implementing kinematics and dynamics principles from mechanics

Publications

Projects

DTS Stock Tracking

DTS is an API-based project that supports stock trading by providing registered users with stock prices and statistical analytics.

Going Everywhere

Going Everywhere is an NFT-based art project supported by the Art Microgrant Award. A key characteristic of blockchain is that records cannot be erased, which can be leveraged to prove that something exists. The artist collected a set of photos of an identifier (Minccino) as evidence of where she had been across the physical world and released the collection as NFTs. Once the project was put on chain, that existence became permanent in the digital world.

Improved Lite Audio-Visual Speech Enhancement (iLAVSE)

iLAVSE is a deep-learning-based audio-visual speech enhancement (AVSE) project that addresses three practical issues often encountered when implementing AVSE systems: the requirement for additional visual data, audio-visual asynchronization, and low-quality visual data.

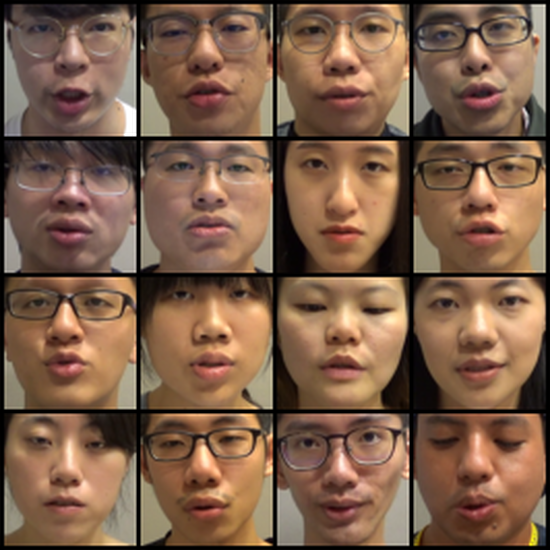

Taiwan Mandarin Speech with Video (TMSV)

TMSV is an audio-visual dataset based on the script of TMHINT (Taiwan Mandarin Hearing in Noise Test).

Lite Audio-Visual Speech Enhancement (LAVSE)

LAVSE is a deep-learning-based audio-visual speech enhancement project that addresses the additional processing costs and privacy problems introduced by visual data.

Smooth and Flexible Movement Control of a Robot Arm

Reproduces human behavior on a robot arm using a nonlinear reference shaping controller, in order to create robots that can safely share a working environment with humans.

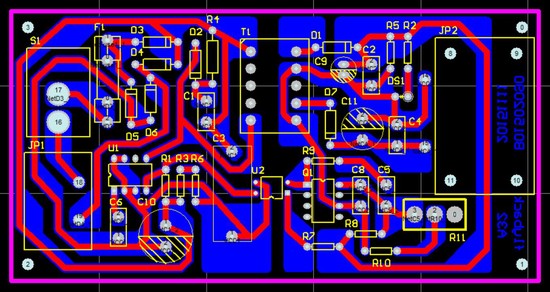

Flyback Converter

Practice in PCB design and soldering.

ME Robot Cup

The ME Robot Cup is a traditional annual event at the Department of Mechanical Engineering, NTU.

Awards & Honors

Skills

Native

Advanced

Advanced